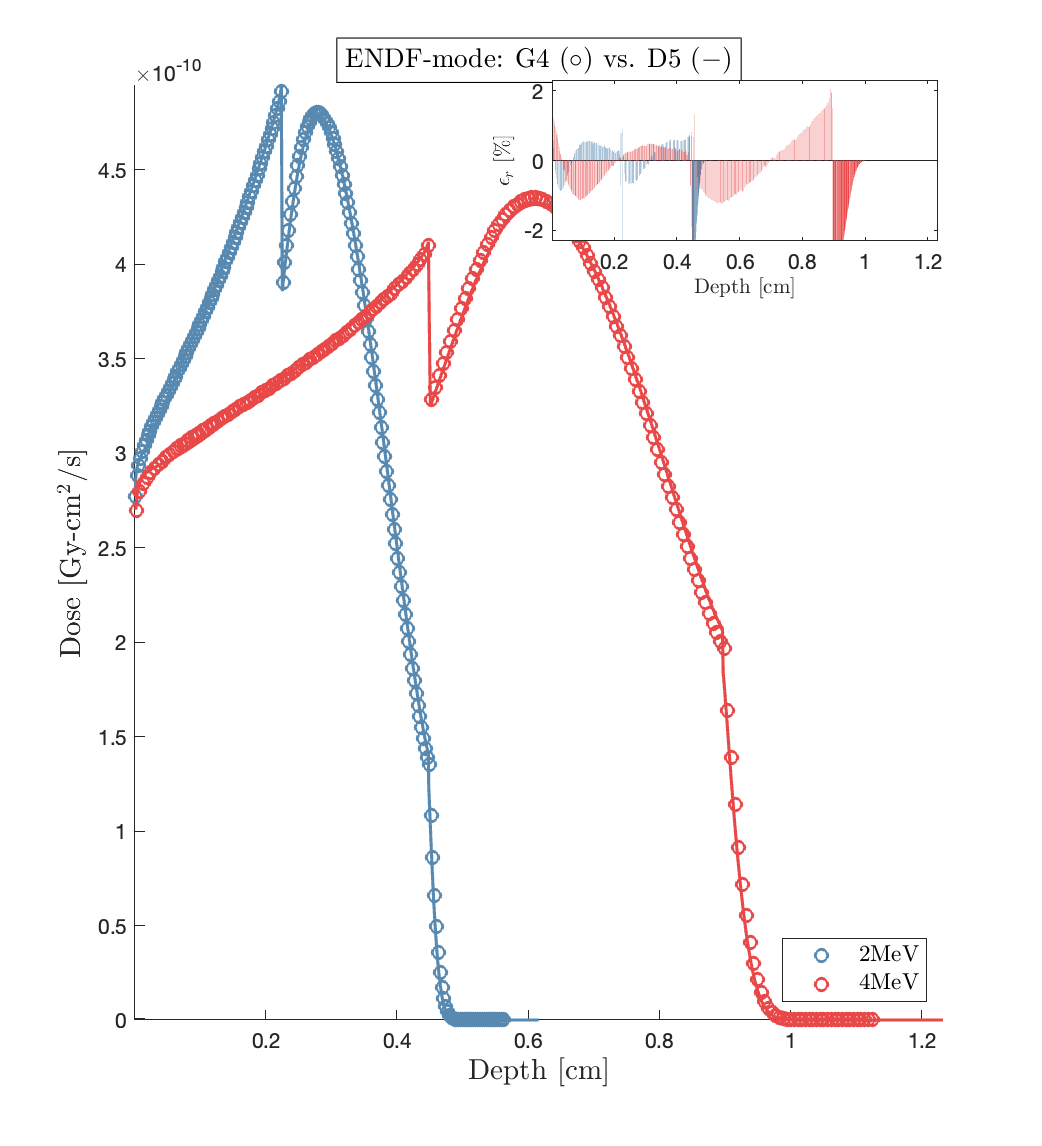

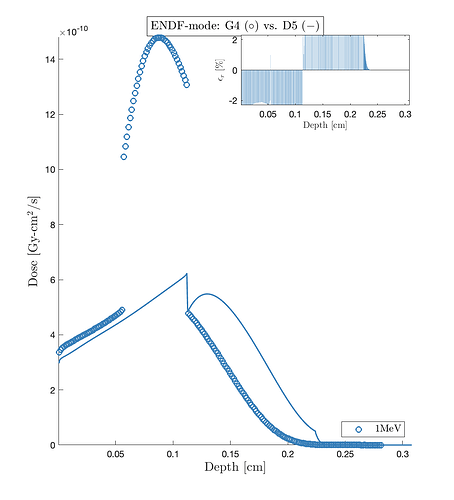

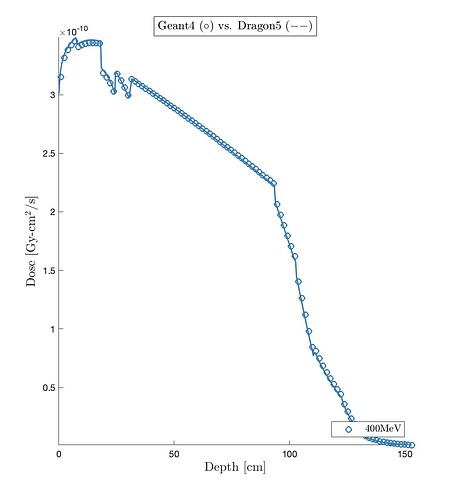

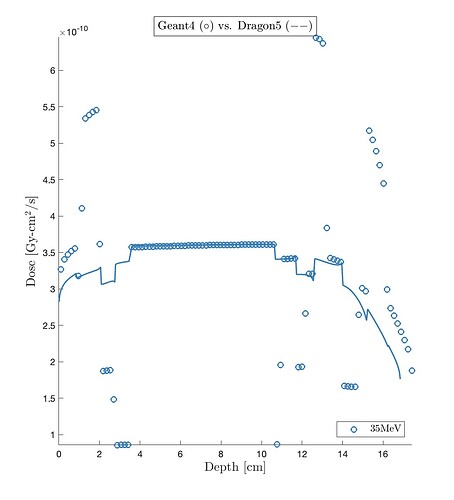

I have already run several thousand Geant4 calculations between 1 MeV and 30 GeV for 107 benchmarks, in order to validate a reference deterministic nuclear reactor code for electron transport. The Geant4 results were very satisfactory and in close agreement with the deterministic solver.

Recently, I had to reinstall Geant4 10.7 and Geant4 11.2 on several clusters and supercomputers and rerun all the Geant4 simulations from scratch. Since then, the Geant4 simulations have become unstable: for some beams I still obtain the usual expected result, while for others Geant4 fails abnormally. These new simulations were multi-threaded, so I reinstalled everything in single-threaded mode, but the problem persists. Geant4 succeeds for roughly 60-70% of the beams and fails for the rest. Which beams fail appears completely random and varies from one cluster to another. Do you have an explanation for this? Could it be a problem with the Geant4 installation?

The benchmark shown here is a slab of water, followed by a slab of aluminum, a slab of steel, and another slab of water. Fig. 1 shows a typical case where Geant4 succeeds (e.g., 2 MeV and 4 MeV), and Fig. 2 shows a typical failure (e.g., 1 MeV). Both figures come from the same benchmark, the same Geant4 application (same classes and headers), the same calculation scheme, the same PhysicsList, and the same number of events, executed on the same cluster. The circles show the dose predicted by Geant4; the continuous lines show the dose predicted by the deterministic nuclear reactor physics code Dragon5.

The simulation is purely electron-based, with a unidirectional electron beam. A StackingAction kills gamma photons, for reasons related to the validation of the electron library. All our published data are based on this functional StackingAction, and we have taken care to adapt it to multithreading.

The results shown here can be reproduced with these configurations:

Geant4 Version: v10.7.p02

Operating System: AlmaLinux 9.3

Compiler/Version: gcc/9.3.0

CMake Version: cmake/3.18.4

Geant4 Version: v10.7.p02

Operating System: Rocky Linux 8.9 (Green Obsidian)

Compiler/Version: gcc/9.3.0

CMake Version: cmake/3.18.4